In some situations, it may be a legitimate choice to permit only one user at a time. For example, when there is a small number of end users, or just a single user. In other situations, it may be acceptable to allow simultaneous users to perform updates, and just ignore the corruption that can arise. For example, in computer games, corruption might be so small that players don’t notice.

However, the vast majority of real-world situations require accurate preservation of data. Viewer counts should be accurate. Bank balances should not accidentally lose money. Documents should not disappear. Paid orders should not get lost.

From the end-user perspective, systems should work as though their actions are processed individually, without interference from other users.

Ideally, a similar experience should be available to software developers. I would like to write code and let the computer ‘figure out’ how to make it perform well with multiple users.

We come to the critical question of this section: can code execute as though there is only a single user, even though there may be thousands of concurrent users?

Serializability

A more precise formulation of the question comes from an understanding of serializability.

First, the term serial refers to one-at-a-time execution. A single-user system is a way to enforce serial execution. A publish/subscribe or event queuing architecture is another way to enforce serial execution (see Chapter 10): each requested operation is queued up and then processed one-by-one by a single subscriber.

The trouble with serial execution is that it is slow to wait for one-at-a-time execution.

The term serializability is a generalization of serial execution. A multiuser-system can achieve higher throughput by carefully interleaving processing of requests while ensuring that the overall result of any calculation is equivalent to some serial execution.

Suppose we have a bank that performs the following steps during a transfer of money from x to y:

-

Read the source balance (Read x)

-

Compute the reduced balance (Compute x' = x - t)

-

Save the updated source balance (Write x')

-

Read the destination balance (Read y)

-

Compute the increased balance (Compute y' = y + t)

-

Save the updated destination balance (Write y')

Now consider two bank transfers. Suppose we want to transfer $1 from Alice (a) to Bobby (b), and $1 from Alice (a) to Carol (c). Assume that each starts at $100.

Here is one serial execution:

|

Timestep |

Alice to Bobby |

Alice to Carol |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read b (100) |

|

|

5 |

Compute b' (101) |

|

|

6 |

Write b' (101) |

|

|

7 |

Read a (99) |

|

|

8 |

Compute a' (98) |

|

|

9 |

Write a' (98) |

|

|

10 |

Read c (100) |

|

|

11 |

Compute c' (101) |

|

|

12 |

Write c' (101) |

As expected, the result is that Alice (a) has $98, while Bobby (b) and Carol (c) have $101 each.

Another serial execution might perform the operations in a separate order:

|

Timestep |

Alice to Bobby |

Alice to Carol |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read c (100) |

|

|

5 |

Compute c' (101) |

|

|

6 |

Write c' (101) |

|

|

7 |

Read a (99) |

|

|

8 |

Compute a' (98) |

|

|

9 |

Write a' (98) |

|

|

10 |

Read b (100) |

|

|

11 |

Compute b' (101) |

|

|

12 |

Write b' (101) |

As expected, even though the execution reverses the order of the two requests, the result is the same: Alice (a) has $98, while Bobby (b) and Carol (c) have $101 each.

Now, suppose the serial execution is no longer required, and one operation from each request overlaps:

|

Timestep |

Alice to Bobby |

Alice to Carol |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read c (100) |

|

|

5 |

Compute c' (101) |

|

|

6 |

Read a (99) |

Write c' (101) |

|

7 |

Compute a' (98) |

|

|

8 |

Write a' (98) |

|

|

9 |

Read b (100) |

|

|

10 |

Compute b' (101) |

|

|

11 |

Write b' (101) |

The result is the same. Assuming that the system can simultaneously read from a and write to c in timestep 6, the overlap has potentially saved one timestep.

How much could these requests overlap without changing the result? Here is another execution trace that saves even more time:

|

Timestep |

Alice to Bobby |

Alice to Carol |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read a (99) |

Read c (100) |

|

5 |

Compute a' (98) |

Compute c' (101) |

|

6 |

Write a' (98) |

Write c' (101) |

|

7 |

Read b (100) |

|

|

8 |

Compute b' (101) |

|

|

9 |

Write b' (101) |

While this trace is not serial, it is serializable because the result is equivalent to a serial execution.

Here is another serializable execution trace:

|

Timestep |

Alice to Bobby |

Alice to Carol |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read a (99) |

Read c (100) |

|

5 |

Compute a' (98) |

|

|

6 |

Write a' (98) |

|

|

7 |

Read b (100) |

Compute c' (101) |

|

8 |

Compute b' (101) |

|

|

9 |

Write b' (101) |

Write c' (101) |

And here is another serializable execution trace:

|

Timestep |

Alice to Bobby |

Alice to Carol |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read a (99) |

|

|

5 |

Compute a' (98) |

|

|

6 |

Write a' (98) |

|

|

7 |

Read b (100) |

Read c (100) |

|

8 |

Compute b' (101) |

Compute c' (101) |

|

9 |

Write b' (101) |

Write c' (101) |

As we saw in the previous section, some traces are not serializable:

|

Timestep |

Alice to Bobby |

Alice to Carol |

|---|---|---|

|

1 |

Read a (100) |

|

|

4 |

Read a (100) |

|

|

5 |

Compute a' (99) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

6 |

Write a' (99) |

|

|

7 |

Read b (100) |

Read c (100) |

|

8 |

Compute b' (101) |

Compute c' (101) |

|

9 |

Write b' (101) |

Write c' (101) |

In this non-serializable trace, $1 has gone missing: Alice (a) has $99, while Bobby (b) and Carol (c) have $101 each.

Transactions

It is easy to ensure serial execution: only do one thing at a time. But how can serializability be enforced?

One technology provided by database systems is the transaction. A transaction is a unit of work, consisting of potentially many individual operations. The database ensures that the transaction executes as though it runs in isolation.

More formally, a transaction is a unit of work to which the database guarantees atomicity, consistency, isolation and durability (ACID):

- Atomicity

-

The transaction is all-or-nothing. If something fails at any point, then every operation in the transaction is reversed or canceled. There should be no partially completed transactions. It should be impossible for other users to see a partially complete update. [1]

- Consistency

-

Any database or business constraints should hold before and after the transaction. The database ensures that foreign keys, uniqueness constraints, views and any other features update correctly as part of the atomic transaction. If an update would result in a constraint violation, the transaction must not succeed: it must be reversed or canceled. [2]

- Isolation

-

The transaction should run as though it is on its own. Transactions should be serializable: the result of running a transaction should be equivalent to some serial execution.

- Durability

-

The transaction must only succeed if it has been saved to a disk (or to backup servers) so that a power outage or system failure does not cause data loss. Durability means permanence: if the transaction succeeded, you can rely on the data not being accidentally lost.

Using transactions

Transactions are an important part of the standards implemented by SQL databases. In most database management systems, a transaction is started by executing START TRANSACTION. [3] After initiating the transaction, the database management system will monitor all queries and updates (SELECT, INSERT INTO, UPDATE and DELETE statements) to ensure that the ACID criteria hold. At any point, ROLLBACK will abort the transaction and all of its changes. To finish the transaction, executing COMMIT will cause the database to perform any final consistency checks and logging to ensure all of the ACID criteria. Should the commit fail (e.g., because the database cannot perform operations required to guarantee ACID), then the transaction will be reversed and the database will return an error.

In simple terms:

-

Start Transaction: Begin recording changes.

-

Commit: Permanently save all the changes.

-

Rollback: Undo all the changes.

The state diagram below depicts the flow:

In working code, all of the transaction operations must execute on the same database connection. On Node.js with the pg package and the PostgreSQL database, you should request a client from the connection pool, rather than performing the queries directly on the pool. [4]

For example, the following code that does not use a transaction:

// Perform updates

await pool.query(

`UPDATE balances SET balance = balance - 1 WHERE user = 'Alice'`

);

await pool.query(

`UPDATE balances SET balance = balance + 1 WHERE user = 'Bobby'`

);To obtain all the benefits of transactions, only slightly more work is required:

// Request a connection from the database connection pool

const client = await pool.connect()

try {

// Begin the transaction

await client.query('START TRANSACTION');

// Perform updates

await client.query(

UPDATE balances SET balance = balance - 1 WHERE user = 'Alice'

);

await client.query(

UPDATE balances SET balance = balance + 1 WHERE user = 'Bobby'

);

// Attempt to save the changes

await client.query('COMMIT')

} catch (e) {

// If something goes wrong, just abort the transaction

await client.query('ROLLBACK');

throw e;

} finally {

// It is important to ALWAYS return the connection back to the connection pool

// This stops the connection from being lost and the pool from running out of active connections

client.release();

}In practice, the ACID guarantees do incur a performance cost. Thus, it is important to minimize the use of transactions and limit their scope as much as possible. A transaction should not last more than a single request and should take as short a time as possible. Do not think of ROLLBACK as a way to add user-friendly ‘undo’ functionality to an application. Instead, think of it as a way of aborting mid-way through a change. If you would like to add undo capabilities to an application, it is better to treat undo as a separate reversing transaction (e.g., use a new transaction to subtract $1 from Bobby and return it to Alice).

Many NoSQL databases, such as MongoDB, do not provide or encourage the use of general-purpose transactions. MongoDB provides ACID guarantees at the level of individual requests. However, by default, MongoDB does not allow transactions to span multiple updates or multiple tables. [5]

How databases guarantee ACID

Database management systems use a range of internal implementation strategies to guarantee ACID properties in a database.

- Pessimistic approaches

-

Pessimistic approaches prevent operations that would violate ACID criteria. The database checks every potential change to ensure that it will be serializable. If necessary, the database may enforce serial execution by using a lock to delay an operation. Pessimistic approaches tend to be slow but rarely fail during

COMMIT. - Optimistic approaches

-

Optimistic approaches permit most operations, even if it is likely they will violate ACID criteria. The database allows all updates but guarantees ACID by performing a final verification during the

COMMIT. If the updates are not serializable, then the database will abort the transaction. The database uses versions and timestamps to check for serializability. Optimistic approaches tend to be much faster, but they are more likely to result in a failedCOMMIT. - Multiversion approaches

-

Multiversion concurrency control combines techniques of both pessimistic and optimistic approaches to improve performance or reduce the likelihood of aborted transactions. Multiversion approaches maintain multiple versions of the same data. Having multiple versions allows the database to simulate isolation by returning different results to the same queries in separate transactions. For example, the database can simulate serial execution by offering an older version of the database to one transaction, even though other transactions have made more recent changes to the database. Databases use snapshots (or shadow pages) to implement multiversion concurrency control.

In pessimistic systems, multiversion methods improve performance while increasing the chance of needing to abort a transaction. In optimistic systems, multiversion approaches will reduce performance but decrease the likelihood of needing to abort a transaction.

Locking

In computer science, a lock is a mechanism that restricts concurrent access to a resource. For example, when you are editing a document on your computer, the operating system will lock the file so that another program cannot simultaneously open it.

A single lock — one lock across the entire database — can enforce serial execution. Instead of using transactions, one exclusive lock could be acquired at the start of each request, and then released at the end of the request. Other concurrent requests must wait until the lock is released so that only one transaction will be making changes at a time. [6]

Production database systems use more fine-grained locks for higher performance. Instead of having a single lock for the entire database, a database can have locks for each table or even individual rows. For example, there might be separate locks for the balance of Alice’s account, Bobby’s account and Carol’s account. Reading Alice’s balance within a transaction will automatically cause the database to establish a lock on the balance of her account until the end of the transaction. A transaction transferring money from Marcia to Greg does not need to lock the balance of Alice, Bobby or Carol or Sam’s accounts. Because they don’t share the same locks, they run simultaneously without interfering with each other.

Production database systems can also use more flexible locks. A common technique is a read/write lock that allows multiple simultaneous readers or just one writer. A read/write lock provides higher performance if most operations involve reading lots of data or shared data, but where writes are comparatively rare.

Versioning and timestamping

Take a numbered serial execution of 5 transactions:

-

Transfer $1 from Alice to Bobby

-

Transfer $1 from Alice to Carol

-

Transfer $1 from Marcia to Greg

-

Transfer $1 from Alice to Sam

-

Transfer $1 from Bobby back to Alice

Now, suppose that, along with the bank accounts, the database stores the identifier of the last transaction that read or wrote the balance. In the table below, I depict the ‘last involved transaction’ in brackets.

|

Transaction |

Alice |

Bobby |

Carol |

Greg |

Marcia |

Sam |

|---|---|---|---|---|---|---|

|

100 (–) |

100 (–) |

100 (–) |

100 (–) |

100 (–) |

100 (–) |

|

|

#1 |

99 (#1) |

101 (#1) |

100 (–) |

100 (–) |

100 (–) |

100 (–) |

|

#2 |

98 (#2) |

101 (#1) |

101 (#2) |

100 (–) |

100 (–) |

100 (–) |

|

#3 |

98 (#2) |

101 (#1) |

101 (#2) |

101 (#3) |

99 (#3) |

100 (–) |

|

#4 |

97 (#4) |

101 (#1) |

101 (#2) |

101 (#3) |

99 (#3) |

101 (#4) |

|

#5 |

98 (#5) |

100 (#5) |

101 (#2) |

101 (#3) |

99 (#3) |

101 (#4) |

The numbers of the ‘last involved transaction’ only increase. Alice’s bank balance isn’t involved in every single transaction so there is no #3. However, the ‘last involved transaction’ never decreases: Alice’s balance follows the sequence #1, #2, #2, #4, #5.

This rule — that transactions identifiers only increase — is the principle of versioning and timestamping.

A database management system can allow multiple concurrent transactions. If the ‘last involved transaction’ (the timestamp) never decreases for any value in the database, then the operations will be serializable. If the database management system detects that a change would cause the timestamp to decrease, it will abort the transaction and expect the user (or the domain logic) to try again later.

As with locking, the performance of versioning can be also be increased by using separate read and write timestamps. Multiple reads of the same value can be performed out-of-order, provided that the writes are consistent with the read timestamps.

Snapshots

Snapshots provide additional flexibility to the database management system to assist with ensuring serializability. The database can keep track of multiple versions of the data. For example, suppose we are transferring Alice’s money to Bobby while calculating the total balance in all accounts:

|

Timestep |

Alice to Bobby |

Compute total |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read b (100) |

Read a (99) |

|

5 |

Compute b' (101) |

Read b (100) |

|

6 |

Write b' (101) |

At the start, the total was $200 (i.e., $100 + $100). At the end, the total was the same amount of $200 (i.e., $99 + $101). However, the total is the inconsistent result of $199 ($99 + $100): the transaction will believe that $1 is missing from the bank.

This problem could be solved using locks:

|

Timestep |

Alice to Bobby |

Compute total |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read b (100) |

Wait for lock on a |

|

5 |

Compute b' (101) |

Wait for lock on a |

|

6 |

Write b' (101) |

Wait for lock on a |

|

7 |

COMMIT |

Wait for lock on a |

|

8 |

Read a (99) |

|

|

9 |

Read b (101) |

The database lock will postpone the ‘Compute total’ until the Alice to Bobby transfer is complete.

The problem could also be solved using timestamps:

|

Timestep |

Alice to Bobby: Transaction #1 |

Compute total: Transaction #2 |

|---|---|---|

|

1 |

Read a (100, #1) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99, #1) |

|

|

4 |

Read b (100, #1) |

Read a (99, #2) |

|

5 |

Compute b' (101) |

Read b (100, #2) |

|

6 |

Write b' (Abort!) |

In step 6, the ‘Alice to Bobby’ transaction (#1) aborts because writing b' in step 6 would result in the timestamp decreasing from #2 to #1. The ‘Compute total’ transaction (#2) also needs to be aborted because its result depends on values from the, now aborted, Transaction #1.

Snapshots offer a better possibility. The database can store old and new versions of the same data:

|

Timestep |

Alice to Bobby |

Compute total |

|---|---|---|

|

1 |

Read a (100) |

|

|

2 |

Compute a' (99) |

|

|

3 |

Write a' (99) |

|

|

4 |

Read b (100) |

Read old a (100) |

|

5 |

Compute b' (101) |

Read old b (100) |

|

6 |

Write b' (101) |

The ‘Compute total’ transaction starts after changes have already been made to the database by the ‘Alice to Bobby’ transaction. However, the ‘Compute total’ transaction reads the old snapshots as if it were executing before ‘Alice to Bobby’ started. While this may seem slightly counterintuitive, note that serializability only means that the operations are equivalent to some serial execution. Serializability does not mean that the latest values are always read. In practice, your users will not notice. If two transactions are running simultaneously, users are unlikely to notice that the results are a few milliseconds out of date. However, the benefit is dramatic: snapshots make it possible to maintain serializability without any need to delay or abort either transaction.

Locks and versions in domain logic

Locking, versioning and snapshots are usually internal details of the transaction processing code of a database management system.

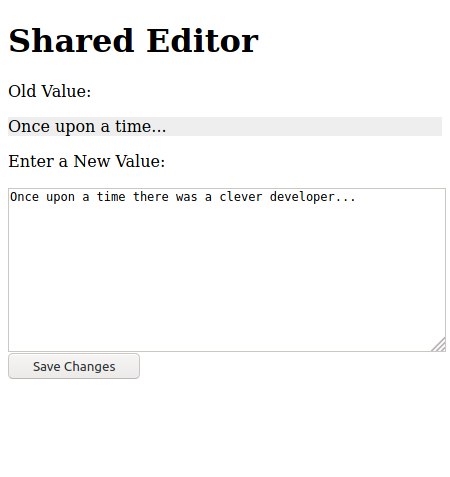

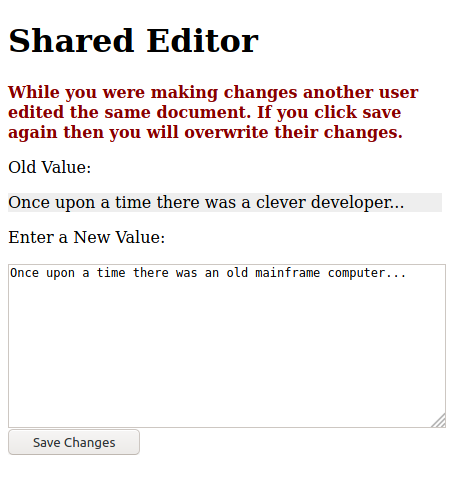

However, the same techniques can be useful when writing code for presentation or domain logic.

There are many situations where two or more people can edit the same data:

-

In a GitHub team, users with the ‘Admin’ role can change the settings of a repository

-

On Wikipedia, users can edit the same page

-

On Trello, users can edit a shared card

-

In most internal business systems, there are records such as contacts, products, orders, and invoices that may be accessed by sales staff, warehouse staff and management

There is a danger that two users simultaneously see and update the same values. As a simple example, imagine that you do an hour of work for each of two different managers at a large restaurant. By chance, the managers both happen to log in to the system at 5:35 pm on Saturday to add the extra hours to your existing count of 15 hours:

|

Time |

Manager 1 |

Manager 2 |

|---|---|---|

|

5:35 |

Sees your unpaid balance of 14 hours |

Sees your unpaid balance of 14 hours |

|

5:36 |

Quickly enters the additional hour: 15 hours |

|

|

5:37 |

Enters the additional hour: 15 hours |

As a result, instead of having 16 hours of pay owing to you, you end up with one hour missing.

Instead, it would be better to lock the record:

|

Time |

Manager 1 |

Manager 2 |

|---|---|---|

|

5:35 |

Sees your unpaid balance of 14 hours |

“Currently locked, please come back later” |

|

5:36 |

Quickly enters the additional hour: 15 hours |

|

|

5:39 |

Sees your unpaid balance of 15 hours |

|

|

5:41 |

Enters the additional hour: 16 hours |

Or, to detect the invalid update using versions:

|

Time |

Manager 1 |

Manager 2 |

|---|---|---|

|

5:35 |

Sees your unpaid balance of 14 hours |

Sees your unpaid balance of 14 hours |

|

5:36 |

Quickly enters the additional hour: 15 hours |

|

|

5:37 |

Enters the additional hour: 15 hours

|

|

|

5:38 |

Enters the additional hour: 16 hours |

The domain logic can enforce consistency by simulating transactions:

-

Locks can be created by adding additional fields: for example, a boolean value

isLocked. The transaction that retrieves the payment details can setisLockedto be true. When a later transaction updates the payment details,isLockedis set to false again. -

Versions can be created by adding an incrementing field. For example an integer

versionfield. The presentation logic can remember the version of the record that the user is currently changing. The presentation logic submits the change and old version number to the domain logic. The domain logic only updates the record if the version number in the database matches the version from the presentation logic (i.e., that no other user has simultaneously made a change, resulting in an update of the version field).

The latter approach (versions) is widespread in object-relational mapping and object-document mapping frameworks. Both Sequelize (through a version attribute) and Mongoose (through the version key) provide built-in optimistic concurrency control, in addition to the lower-level optimistic concurrency features already performed by the database management system.

Weaker forms of isolation

ACID is an ideal for transactions, but there is a significant performance penalty involved:

- Atomicity

-

The database must record transaction state and rollback information. It must track multiple versions of data.

- Consistency

-

The database must check and enforce potentially complex system constraints after every change.

- Isolation

-

Locks cause delays. Timestamps require retries.

- Durability

-

The database management system must wait for the disk drive to finish writing before it can confirm success.

However, these guarantees may not matter. What does your application really need?

-

Does it matter if your users see stale data or temporarily inconsistent data?

-

Does it matter if your users see slightly different things if they examine the same data?

-

Does it matter if you lose some data?

-

Does it matter if your users experience small delays?

-

Can you recover in other ways (e.g., by manual processing a refund) if something goes wrong?

For a business’s most critical data, the answer is probably a resounding “Yes! It does matter if we lose data!”. However, for non-critical data, users may prefer higher performance and overlook the occasional glitch (e.g., in online games, in social media ‘like’ counts, in informal messaging).

The SQL standards and most SQL databases offer reduced forms of isolation that do not guarantee full ACID or full serializability. Some phenomena that can occur at lower isolation levels include:

-

Dirty reads: transactions can see the intermediate values from other transactions that have not yet committed

-

Non-repeatable reads: repeated execution of the same read command resulting in different values in a single transaction

-

Phantom reads: repeated execution of the same query returning different rows even if performed within a single transaction

The default configuration of most commercial databases is a reduced isolation level. For example, in PostgreSQL, non-repeatable reads and phantom reads can occur unless you execute SET TRANSACTION ISOLATION LEVEL SERIALIZABLE command after starting each transaction.

MongoDB and other NoSQL databases also provide lower levels of isolation than full serializability.